Autonomous and democratized user testing

Most organizations now work in an agile way, which leaves little time for traditional user testing, so many are looking for new ways of approaching it.

We simply do not have time for extensive in-person, think-aloud tests followed by time-consuming analysis and reports (which we know no one ever reads). By the time we are done, the development team is long gone, working on something else, and the product or feature has been shipped without any iterations.

Throughout my career, I have worked a lot with agile UX, and it still puzzles me to see the approach we as UX practitioners often apply to user testing. There still seems to be a consensus that ‘real’ and valid user testing has to be conducted in person, and very often as a think-aloud test.

Please do not misunderstand me. This type of testing definitely has its place, but not in every user testing scenario. It seems to be the default approach no matter what is being tested, where we are in the development process, or which type of insights or results we need in order to make informed decisions about our design. And often this approach is exactly what stops user testing from happening, simply because it takes too long to get from planning to insights.

I am not here to shame in-person or think-aloud user tests. But I want to advocate for a more strategic approach to user testing, instead of this ‘one type fits all’ approach. Working in agile environments, we need to be able to test fast and often. My dream is that we start working with user testing the same way we work with software testing – continuously. This is something we have been discussing for a while, and we have come up with what we currently call autonomous user testing.

Autonomous user testing

The reality of traditional user testing methods is that they are very difficult to incorporate into agile development, since they simply take too much time to conduct and analyze. Often we do not need the level of detail we get from them. What we often need is a top five of the worst usability issues, because that is what we have the time and resources to fix in the next sprint. Again, I want to emphasize that sometimes we need more in-depth work, either at the beginning of a project or when we see that something is not performing as it should and we cannot pinpoint the root cause. Often, however, we (only) need to know how our design performs: whether users understand it and can use it, and whether we have overlooked any crucial use errors.

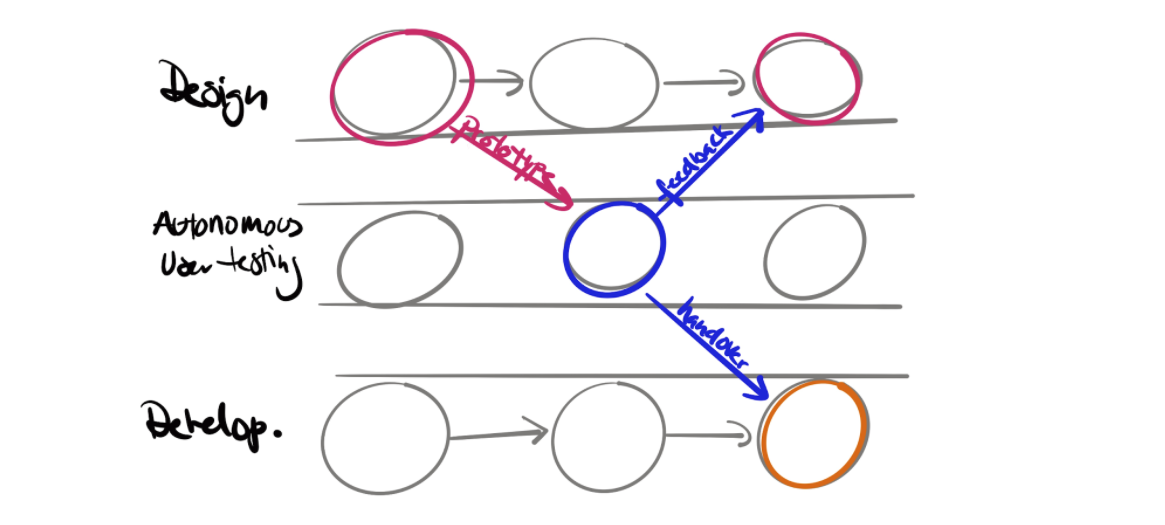

I represent Preely and, as you might know, Preely is a remote, unmoderated user testing platform. Because the platform is unmoderated, it can act as an extra hand for user testing, which makes a continuous user testing framework possible. This is done by adding an extra track to development, the autonomous user testing track (see model).

Autonomous user testing track

We imagine that the UX designer sets up an unmoderated test at the end of a sprint, goes to work on something else in the upcoming sprint, and then returns to the test to harvest results and insights. If everything is working as intended, the design goes into production. If not, we make another iteration of the design. This type of track does not take much time, since the UX designer sets up the test once and can then test with 10, 100, or 1,000 participants – the effort spent is the same (see The Price of User Testing). The platform facilitates the user tests and makes the insights and results easy to interpret. The UX designer thereby has time to look into more in-depth user research or more in-depth user testing, and still gets the valuable insights of continuous user testing.

An additional gain of working with an autonomous user testing track is that it can help democratize user testing.

Democratized user testing

There seems to be a tendency to look at user involvement and user testing as something you need to be initiated into before you are allowed to conduct it. This is something I worked a lot with during my Ph.D., where I looked at how to enable software teams working on embedded software for medical devices to facilitate user involvement and user tests on their own within development sprints. Having non-practitioners conduct this type of work is often referred to as ‘democratized user research’. During this work it became evident that we need light versions of the academic methods so they suit the industry, and that non-practitioners are very keen on working from templates. The beauty of a remote, unmoderated platform such as Preely is that it actually supports both. Such platforms are built to cater to a wide range of users, not only UX professionals, so they offer a lot of guidance and template-based approaches to help set up a user test. In addition, because the test is unmoderated, a lot of the conditions can be kept constant, which can otherwise be very difficult – especially if you are not trained in it.

Of course, in an ideal world where time and resources are unlimited, all of us would want to sit together with our users. But many of us face another reality, and I definitely believe that an autonomous, and maybe democratized, approach to user testing can help us free up time and resources, which in the end might give us the opportunity to actually spend more time with our users.