Test: Usability test

The tasks can be very specific (e.g. “You are visiting our website for the first time. You have some questions about our services and want to contact us. Find our email address.”) or very open (e.g. “One of your friends has sent you a link to a website. Open the link and take a look around.”), depending on the assumptions you are testing.

With a usability test you can identify:

- Problems in your solution/product/service

- Areas for improvement in your solution/product/service

- Your participants’ mental models of your solution/product/service

Unmoderated test

You can use Preely to conduct a remote, unmoderated usability test. When you do this, Preely is the test facilitator. This means that everything you normally do during a face-to-face, moderated test is done by the platform. You therefore set up tasks and ask questions via the platform.

Moderated test

Preely can also be used for moderated tests, both remote and face-to-face. In these cases you are the facilitator, and the platform is used to collect performance data (time on task, clicks, error rate, etc.).

Qualitative, quantitative or mixed methods

Data from a usability test can be quantitative or qualitative. Preely automatically collects performance data during your test, e.g. time on task and success rate (see Metrics). When you set up your test, you can also ask questions throughout the test. This gives you different types of self-reported data, e.g. insights into what your participants think about your solution, which design they prefer, and why.

Mixed methods give you both qualitative and quantitative data. As an example, you can have quotes from open-ended questions supporting the ‘hard numbers’. Preely is a great tool for mixed methods usability tests. When working with mixed methods, it is important to note that you need to follow the paradigm of quantitative tests: be very consistent regarding conditions, adhere closely to the plan, and do not change your test design. Since Preely is a platform for remote, unmoderated tests, you avoid bias from a facilitator.

Step-by-step guide to setting up a usability test in Preely

Click “Create test” and choose device.

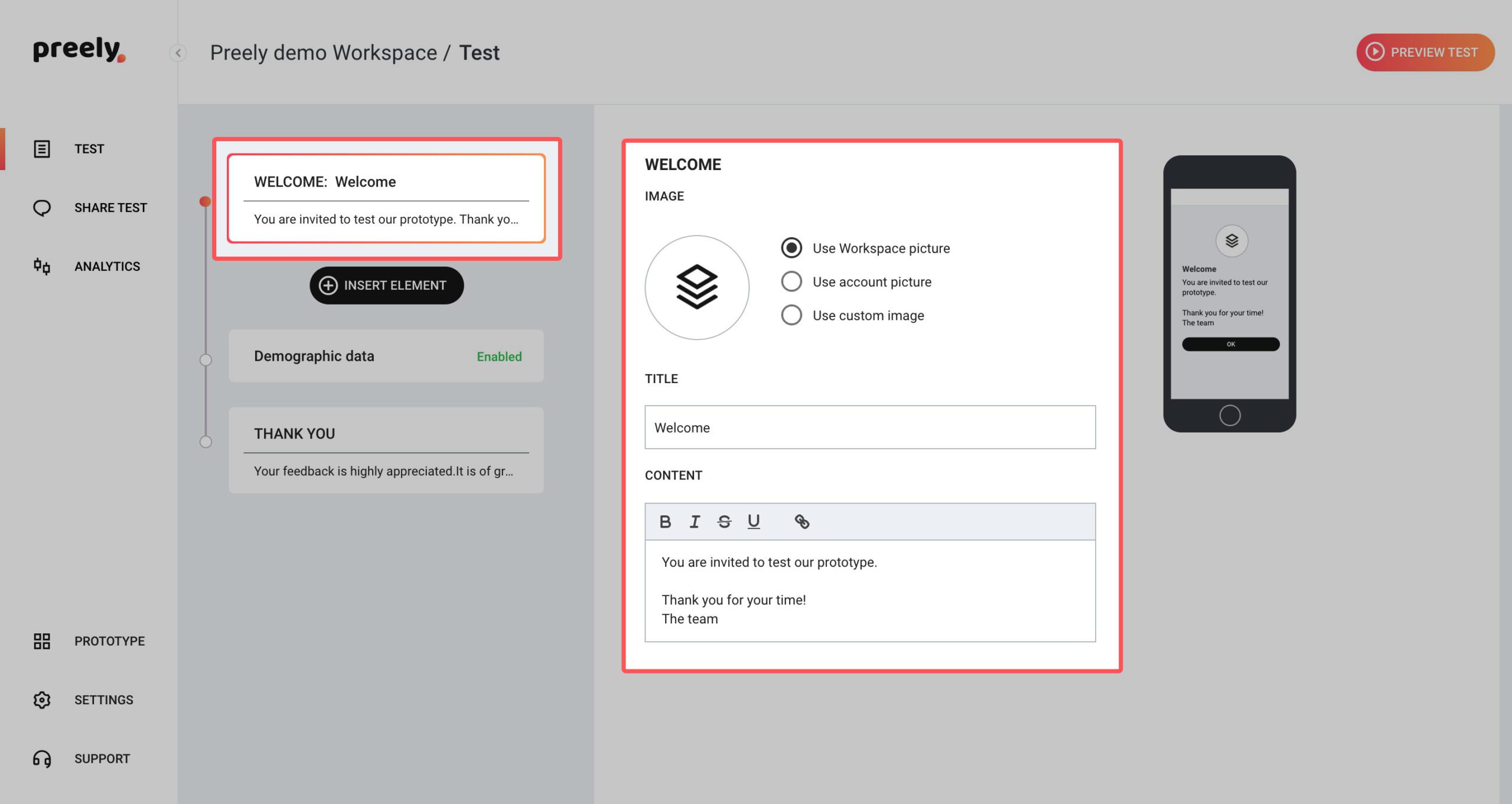

1. Welcome your participants

We have written a short welcome to your participants. If you want to change it, just write your own personal welcome.

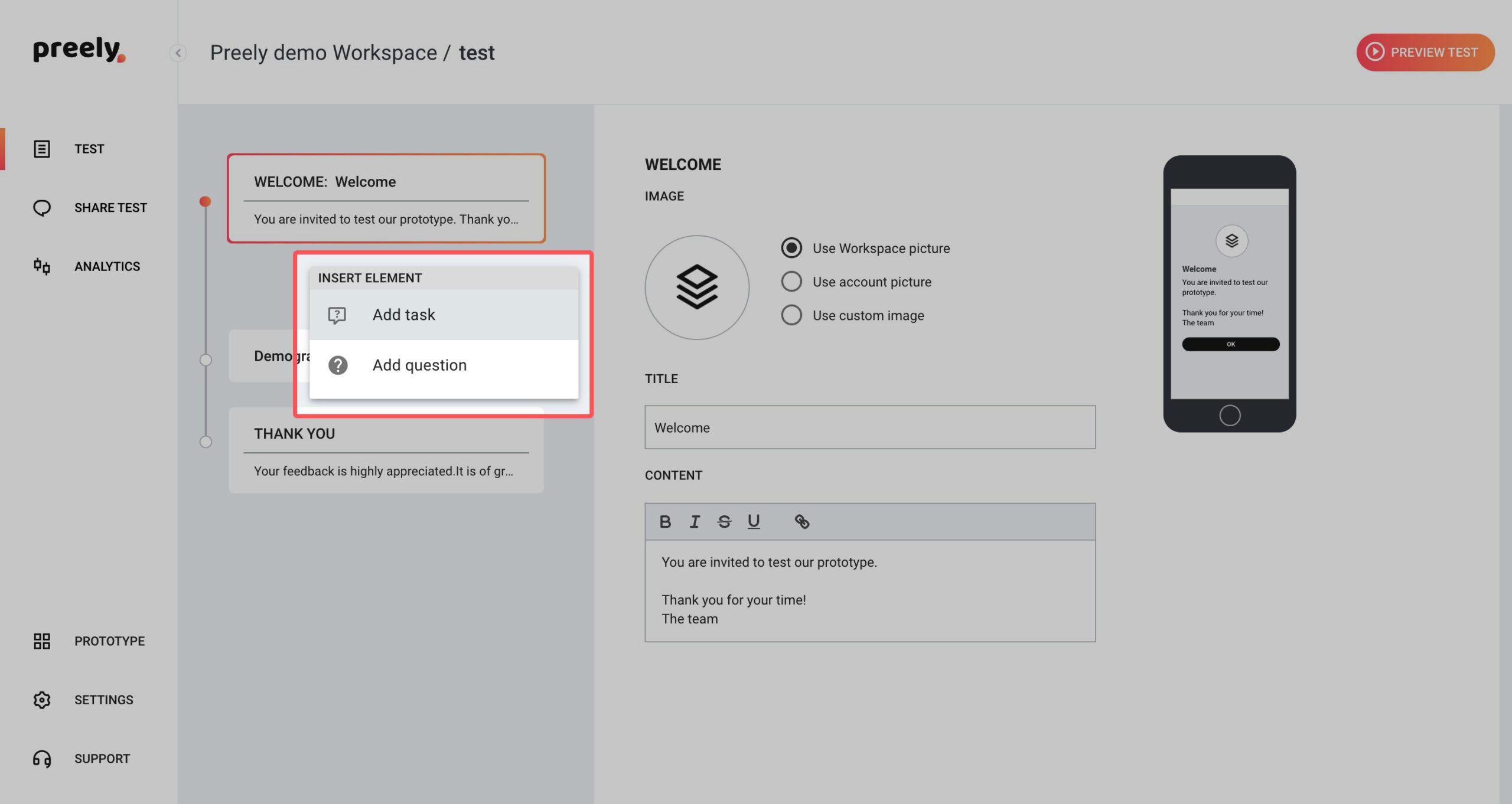

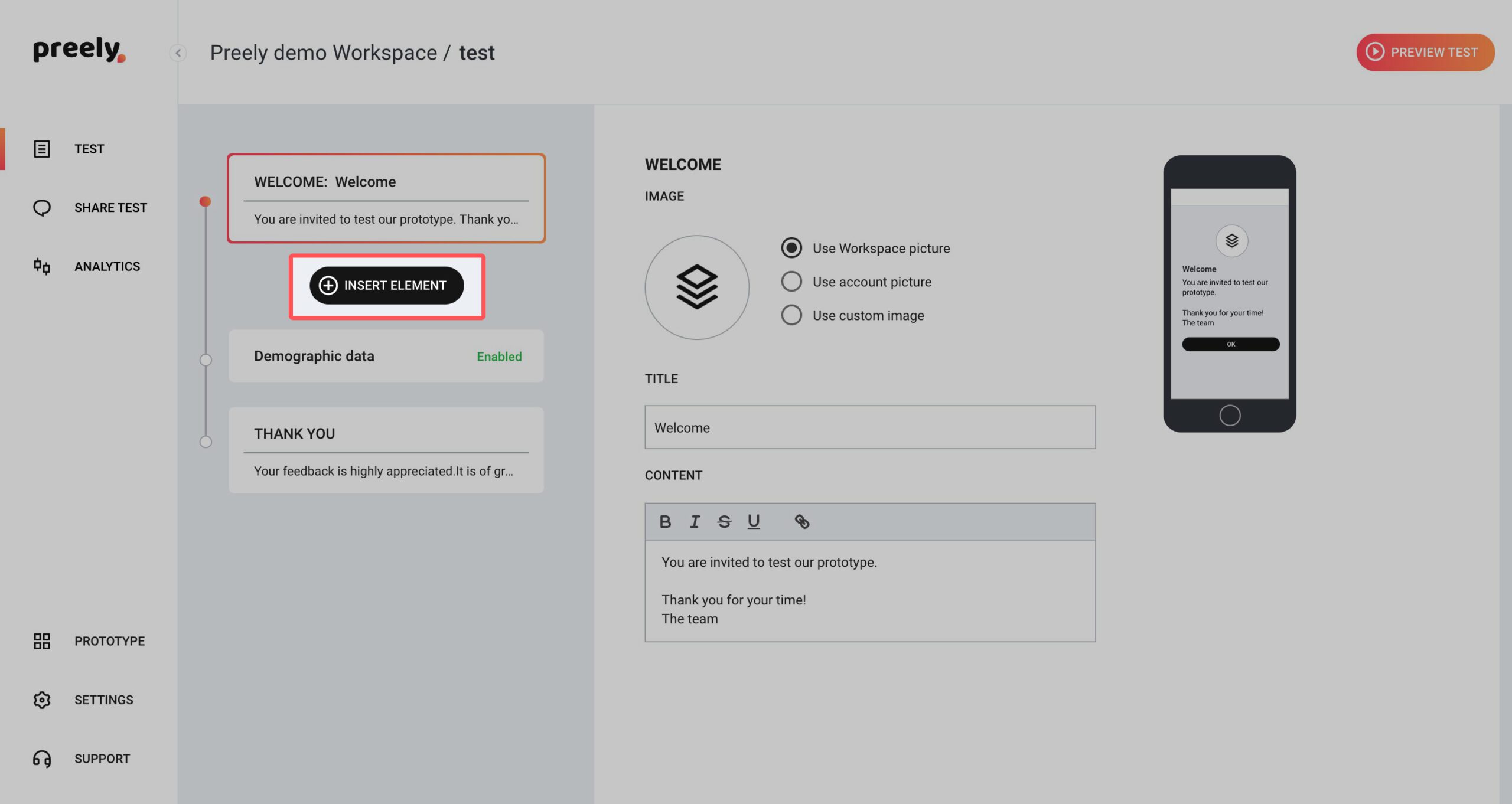

2. Add tasks and questions

This is where you truly set up your test. Click ‘Insert element’ and create different tasks and ask your participants questions.

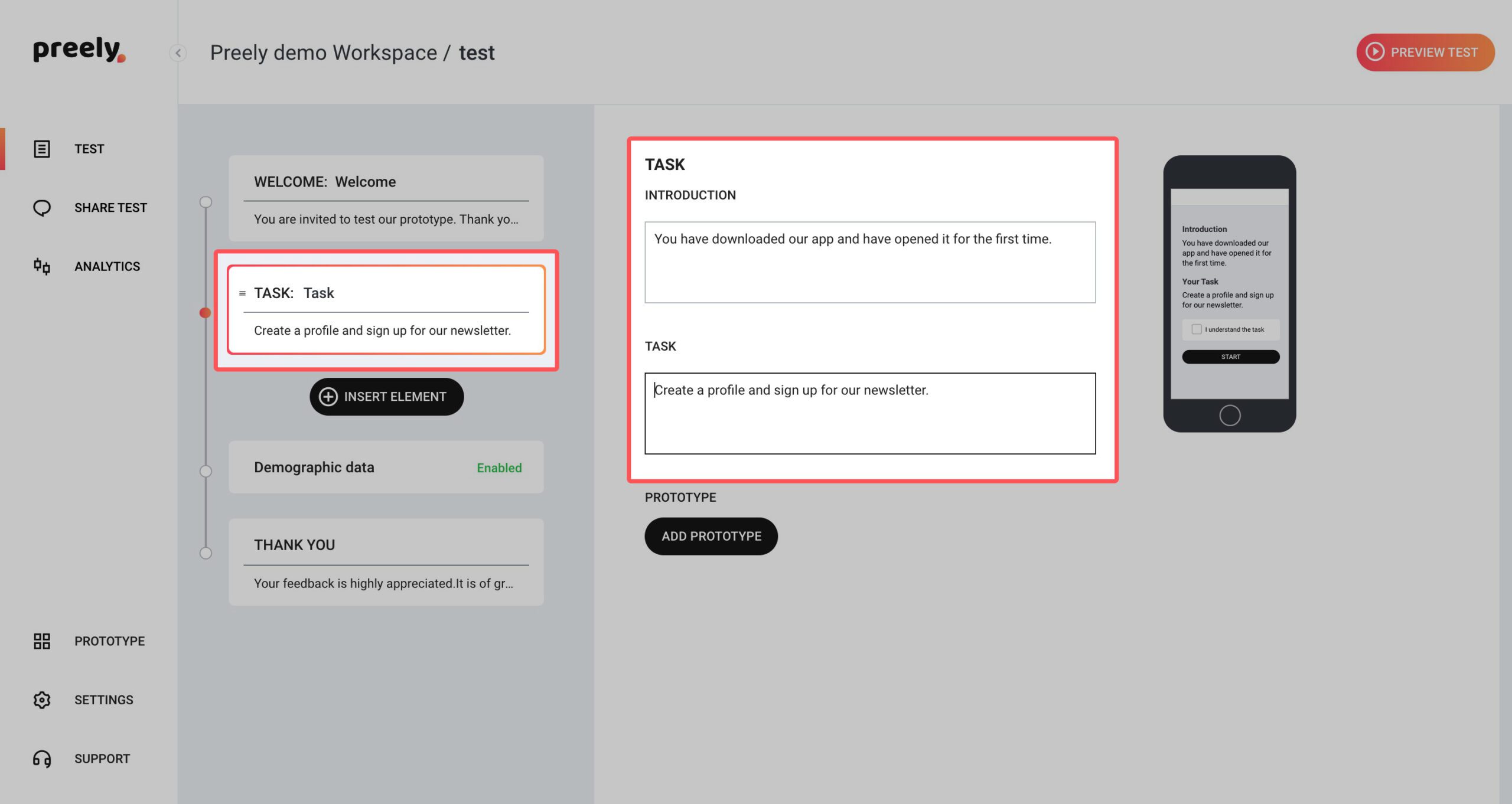

2.1 Tasks

When you want your participants to perform a task in your test, you add a task to your test. You write an introduction and then you formulate the task. Try to break down your tasks into small chunks.

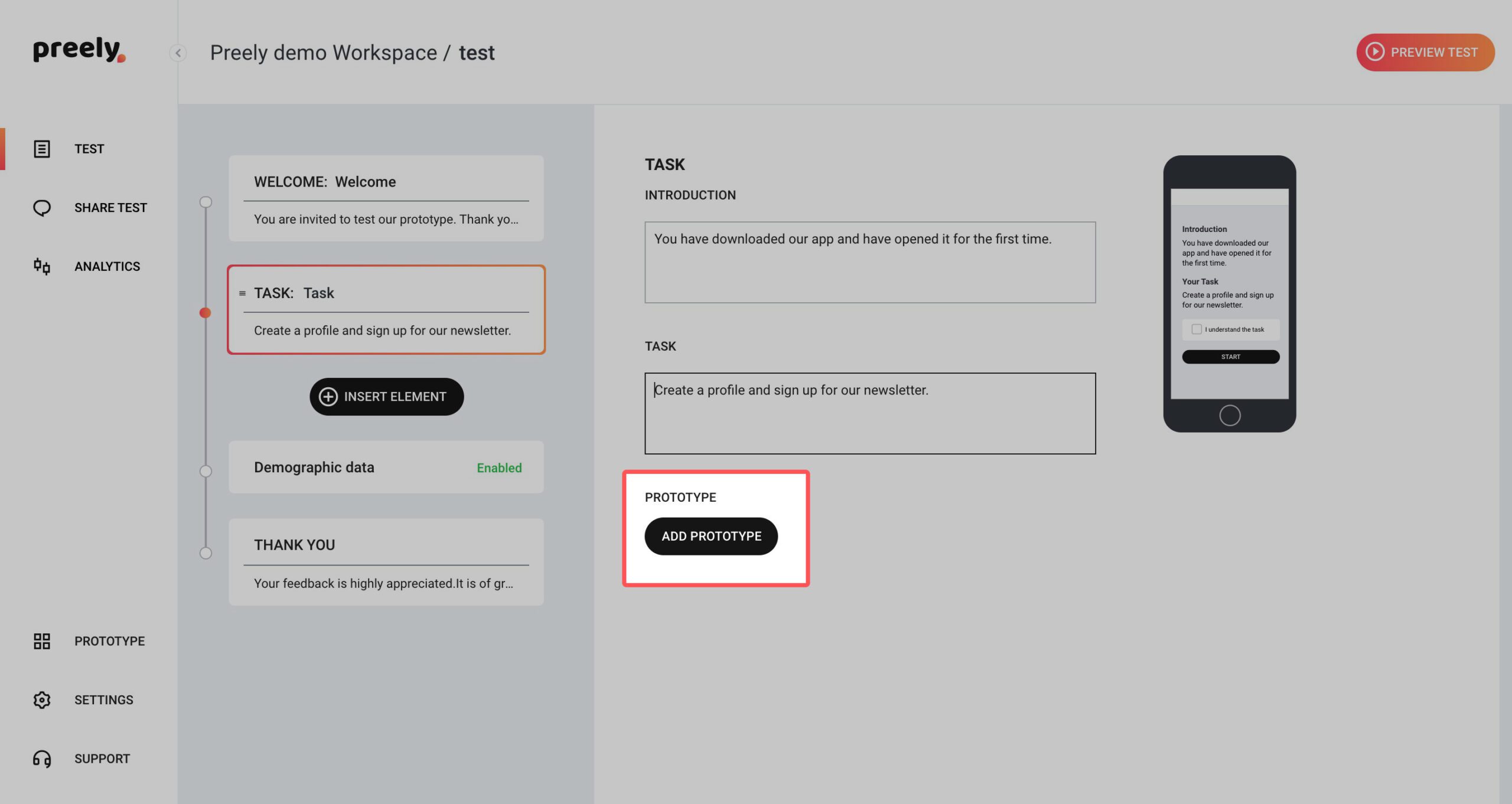

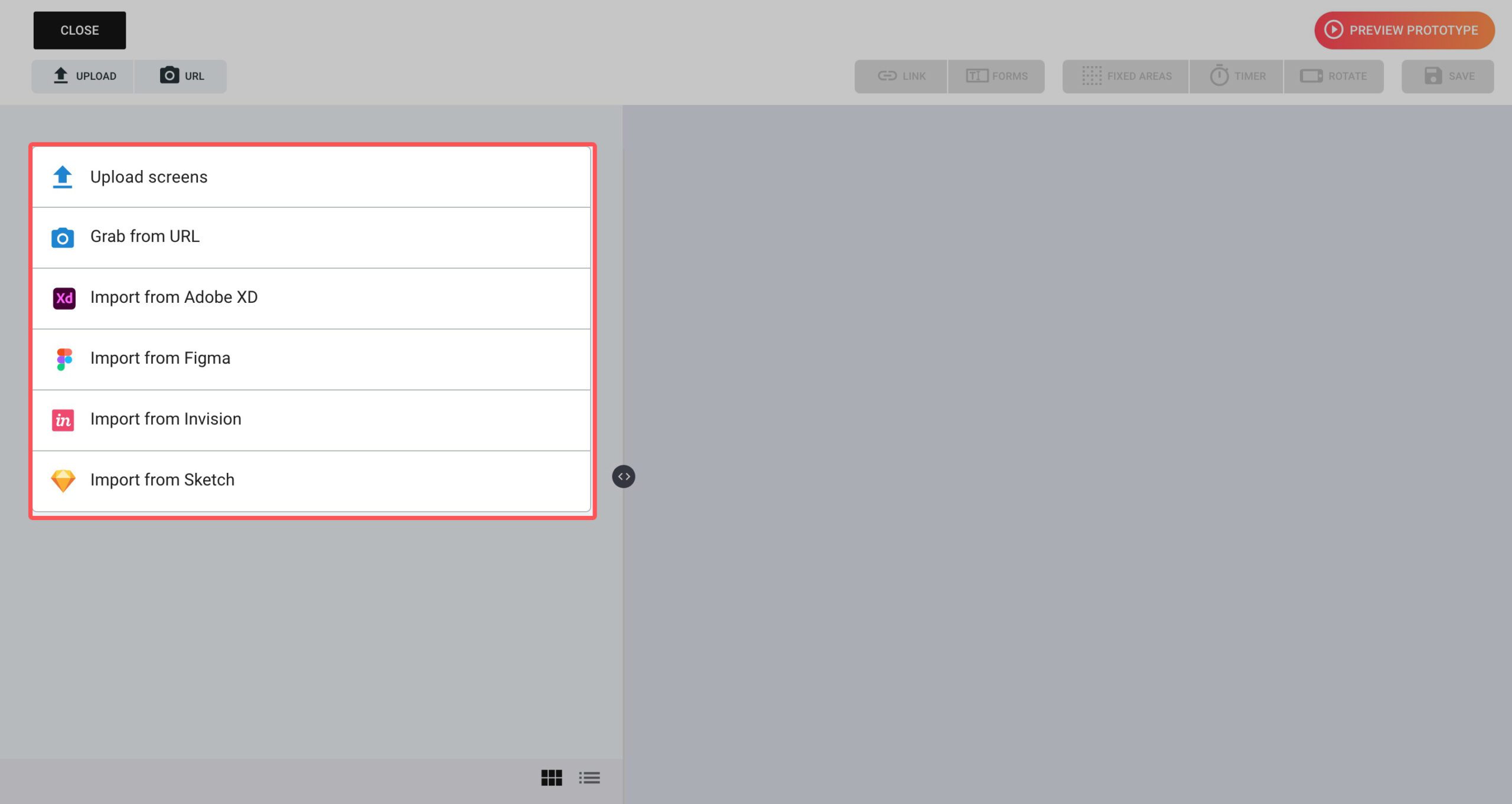

2.2 Add prototype

Click ‘Add prototype’ and upload your design(s): screenshots or Adobe XD, Figma, or Sketch artboards.

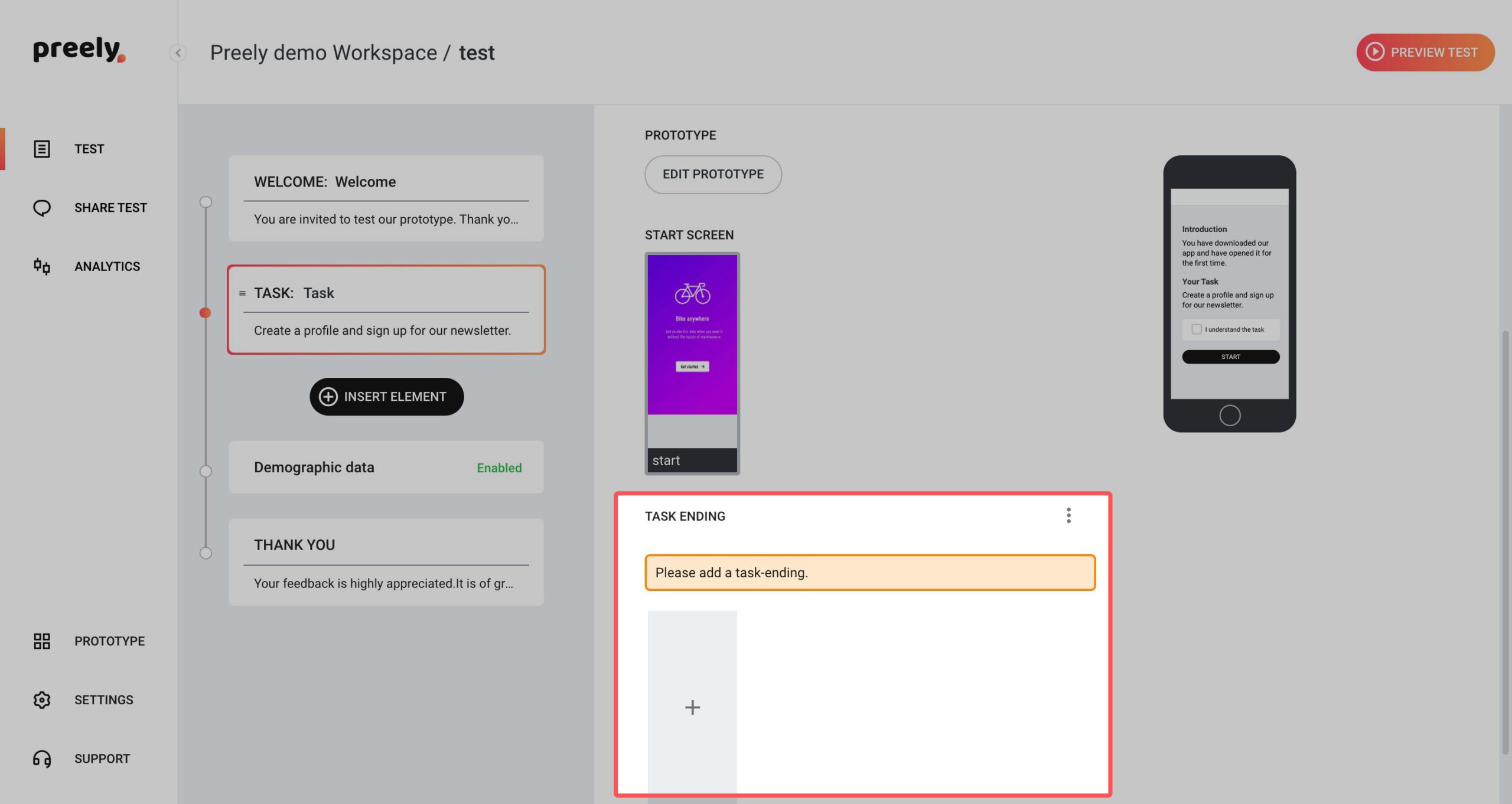

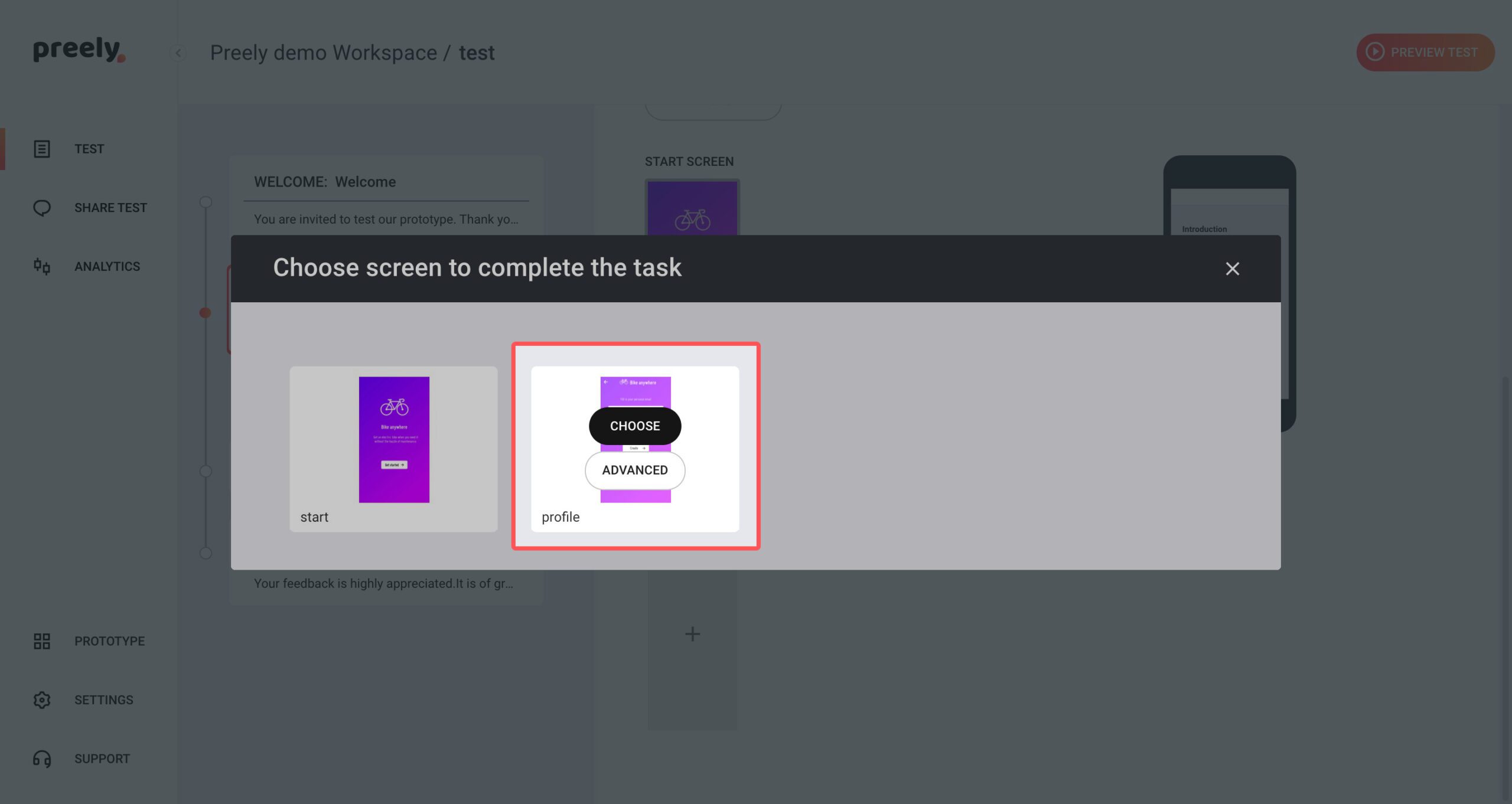

Remember to set an end screen on your tasks. This can be either:

- ‘Endpoint’: Your participants must click on a specific spot in your prototype to end the task

- ‘Timer’: You set a timer on the last screen in your test, and the task ends after a set amount of time

2.3 Questions

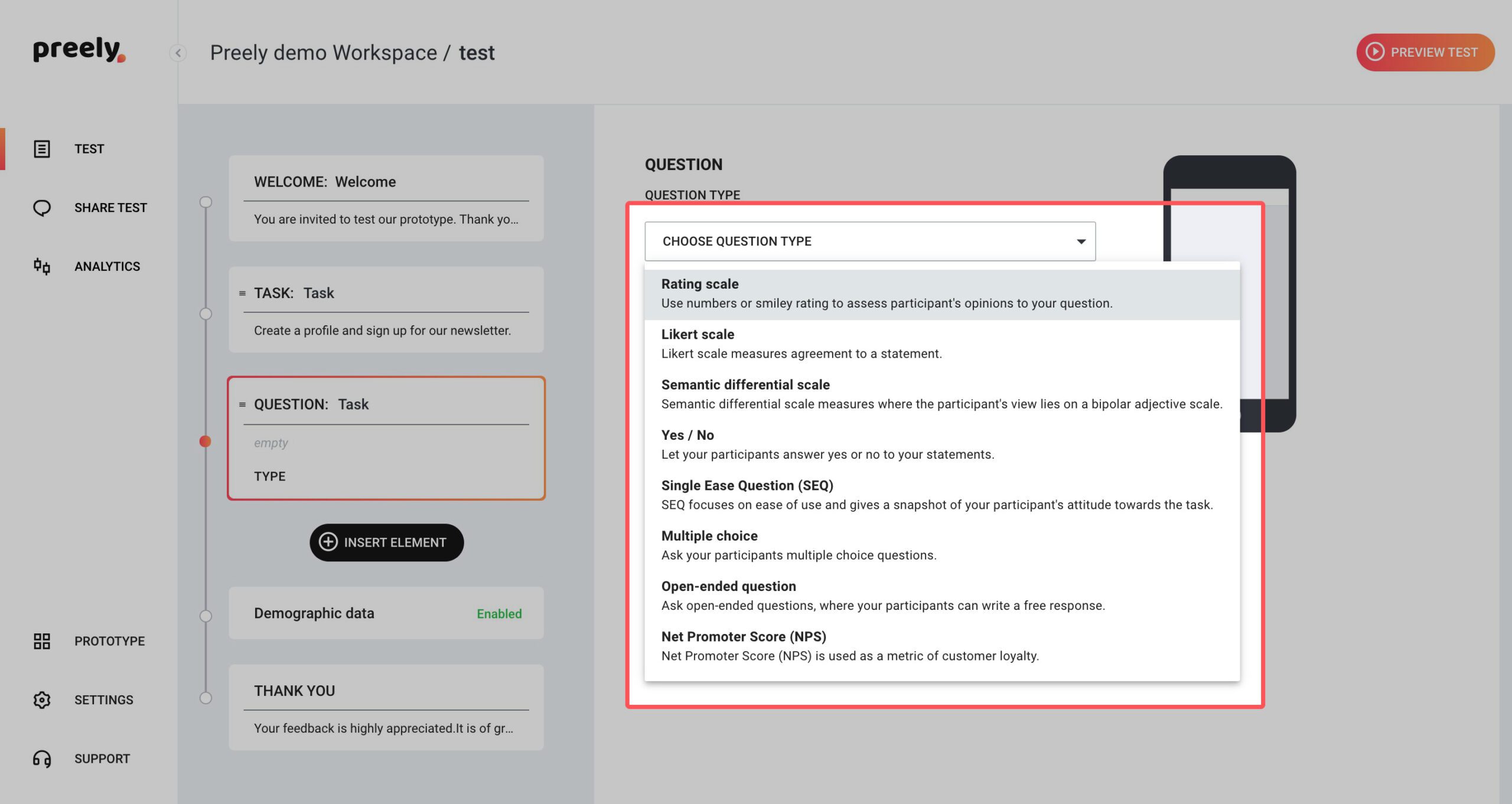

When you want to ask your participant questions in your test, you add questions to your test.

Here you can choose between different answer types:

- Rating and opinion scales, such as Likert scales, semantic differential scales, smiley ratings, and Net Promoter Score (NPS)

- Multiple choice

- Open-ended questions

You can set any question to be optional, and you can include a follow-up question for both rating questions and multiple choice questions.

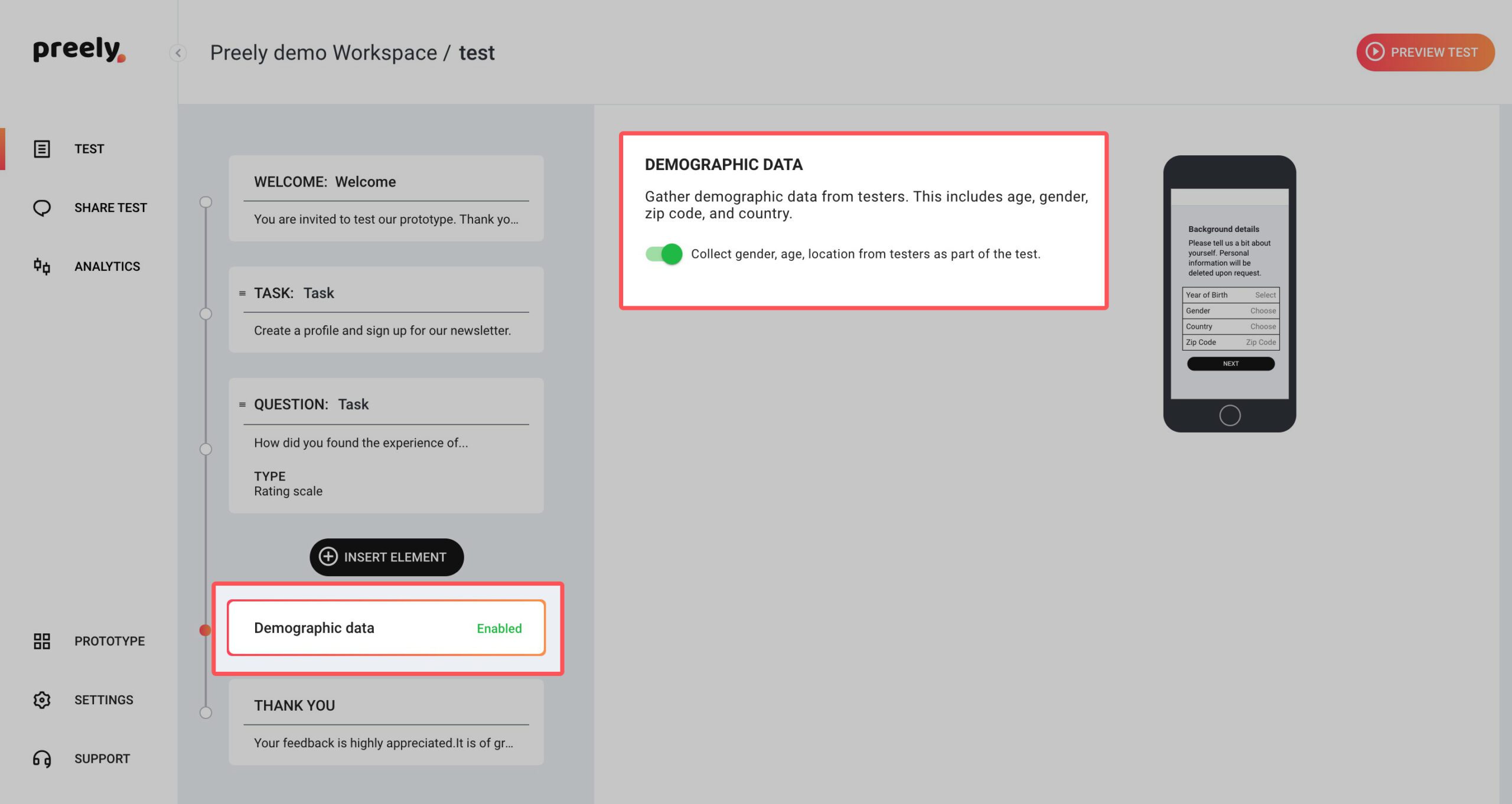

3. Demographic data

Here you can toggle whether or not to ask for demographic data. Demographic data include gender, age, zip code, and country.

4. Test completed

At the end of the test, you’ll want to thank your participants for taking part. We have written a short thank-you note. If you want to change it, just write your own personal thank you.

Analytics – performance data

Success rate:

Whether or not the participant was able to complete the task.

Time on task:

How long it took the participants to complete the task.
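If you want to double-check these two metrics by hand, the arithmetic can be sketched as below. This is only an illustration: the record layout (`participant`, `completed`, `seconds`) is a hypothetical example, not a Preely export format, and counting only successful attempts towards time on task is an assumption you may want to change.

```python
from statistics import mean, median

# Hypothetical data: one record per participant for a single task.
results = [
    {"participant": "P1", "completed": True, "seconds": 42.0},
    {"participant": "P2", "completed": True, "seconds": 58.5},
    {"participant": "P3", "completed": False, "seconds": 120.0},
    {"participant": "P4", "completed": True, "seconds": 37.5},
]


def success_rate(records):
    """Share of participants who completed the task (0.0 to 1.0)."""
    return sum(r["completed"] for r in records) / len(records)


def time_on_task(records):
    """Mean and median seconds, counting successful attempts only (assumption)."""
    times = [r["seconds"] for r in records if r["completed"]]
    return mean(times), median(times)


print(f"Success rate: {success_rate(results):.0%}")
avg, med = time_on_task(results)
print(f"Time on task: mean {avg:.1f}s, median {med:.1f}s")
```

With few participants, the median is often a more robust summary than the mean, because a single slow participant can pull the mean up considerably.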

Number of Paths:

The number of paths indicates whether the participants were confused and looked in multiple places before completing the task. The different paths are good indicators of the participants’ mental models (together with Actions).

Actions:

Here you can see how your participants have clicked throughout your prototype. This is a very good indicator of the participants’ mental models (together with Paths).

Screen statistics:

- Avg. time to first action: Indicates how much cognitive strain the participants experienced

- Total visits compared to Unique visits: When the Total number of visits is high compared to Unique visits, this indicates confusion: the participants have been searching around and may have started over. You can also use Avg. time on screen as a good indicator of how difficult the task was

- Hotspot hit ratio: We want this high. If it’s low, your participants have struggled to find their way around your prototype

- Top 3 next screens: Great source for where your participants have been looking for relevant information

Note: You can click on the different Link spots and see how many participants have clicked in that specific area.
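To make the comparison of Total visits and Unique visits concrete, here is a minimal sketch. The visit log is invented for illustration, and the definition used (a unique visit counts each participant at most once per screen) is an assumption, not a documented Preely formula.

```python
# Hypothetical visit log: (participant, screen) in the order visited.
visits = [
    ("P1", "Home"), ("P1", "Contact"),
    ("P2", "Home"), ("P2", "About"), ("P2", "Home"), ("P2", "Contact"),
    ("P3", "Home"), ("P3", "Services"), ("P3", "Home"), ("P3", "Home"),
]


def visit_stats(log, screen):
    """Return (total visits, unique visits) for one screen.

    Assumption: a unique visit counts each participant at most once.
    """
    hits = [participant for participant, s in log if s == screen]
    return len(hits), len(set(hits))


total, unique = visit_stats(visits, "Home")
print(f"Home: {total} total visits, {unique} unique, ratio {total / unique:.1f}")
```

A ratio well above 1 means participants kept returning to the same screen, which is the pattern described above as a sign of confusion.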

Paths:

Designers often have an ideal path in mind when designing a digital product. The number of paths indicates whether the participants were confused and looked in multiple places before completing the task. The different paths are good indicators of the participants’ mental models.
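As a rough illustration of how the number of paths relates to an ideal path, the sketch below counts the distinct routes participants took and how many of them followed the ideal one. The screen names, the `paths` structure and the `ideal_path` are all hypothetical examples.

```python
from collections import Counter

# Hypothetical data: the ordered screens each participant visited.
paths = {
    "P1": ["Home", "Contact"],
    "P2": ["Home", "About", "Contact"],
    "P3": ["Home", "Contact"],
    "P4": ["Home", "Services", "Home", "Contact"],
}

# Assumed ideal path for this task.
ideal_path = ("Home", "Contact")

# Count how many participants took each distinct path.
path_counts = Counter(tuple(p) for p in paths.values())
number_of_paths = len(path_counts)
on_ideal_path = path_counts[ideal_path]

print(f"Number of paths: {number_of_paths}")
print(f"Participants on the ideal path: {on_ideal_path} of {len(paths)}")
for path, count in path_counts.most_common():
    print(f"{count} x {' > '.join(path)}")
```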

Analytics – self-reported data

Under ‘Analytics’ in Preely you can easily get an overview of the chosen answers. Depending on the answer types you choose for your questions, you have different analytics options:

Opinion scales: Likert Scale & Semantic Differential Scale

On the ‘Summary page’ you can see the average rating, together with the highest and lowest rating. If you click ‘Answers’ you see the distribution of answers across the scale, and you can see who gave which rating.

For more information see ‘Opinion scales: Likert Scale & Semantic Differential Scale’.
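The summary figures for an opinion scale are simple to reproduce if you want to sanity-check them. The sketch below assumes a 1 to 5 Likert scale and uses made-up ratings; the `summarize` helper is a hypothetical name, not part of Preely.

```python
from collections import Counter
from statistics import mean

# Hypothetical ratings on a 1-5 Likert scale, keyed by participant.
ratings = {"P1": 4, "P2": 5, "P3": 2, "P4": 4, "P5": 3}


def summarize(ratings, scale=range(1, 6)):
    """Average, highest and lowest rating, plus the count per scale point."""
    values = list(ratings.values())
    counts = Counter(values)
    return {
        "average": mean(values),
        "highest": max(values),
        "lowest": min(values),
        # Include scale points nobody chose, so gaps are visible.
        "distribution": {point: counts.get(point, 0) for point in scale},
    }


summary = summarize(ratings)
print(summary)
```

Looking at the distribution alongside the average is worthwhile: the same average can come from broad agreement or from a split between very high and very low ratings.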

Closed-ended questions: Multiple choice and Yes/No

On the ‘Summary page’ you can see the top three chosen options for each question. If you click ‘Answers’ you see all chosen answers for the specific question.

For more information see ‘Closed-ended questions: Multiple choice & Yes/No’.
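A top-three ranking like the one on the summary page can be sketched with a simple tally. The answer options below are invented for illustration.

```python
from collections import Counter

# Hypothetical answers to one multiple choice question.
answers = [
    "Email", "Phone", "Email", "Chat", "Email",
    "Phone", "Contact form", "Chat", "Phone", "Email",
]

# Tally each option and keep the three most frequently chosen.
top_three = Counter(answers).most_common(3)

for option, count in top_three:
    print(f"{option}: {count} of {len(answers)} ({count / len(answers):.0%})")
```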

Open-ended questions: Free response

On the ‘Summary page’ click ‘Answers’ on the question you want to see answers to, then you’ll see all answers for that specific question.

For more information see ‘Open-ended questions: Free response’.

Post test: Net Promoter Score (NPS)

On the ‘Summary page’ you can see how the ratings fall into the three categories. If you click ‘Answers’ you can see who gave which rating.

For more information see ‘Post test: Net Promoter Score (NPS)’.
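For reference, the three NPS categories and the score itself follow the standard definition: on a 0 to 10 scale, ratings of 9 to 10 are promoters, 7 to 8 are passives and 0 to 6 are detractors, and the score is the percentage of promoters minus the percentage of detractors. A minimal sketch with made-up ratings (the `nps` helper is a hypothetical name):

```python
def nps(scores):
    """Group 0-10 ratings into the three NPS categories and compute the score."""
    promoters = sum(1 for s in scores if s >= 9)
    passives = sum(1 for s in scores if 7 <= s <= 8)
    detractors = sum(1 for s in scores if s <= 6)
    # NPS = % promoters - % detractors, ranging from -100 to 100.
    score = round(100 * (promoters - detractors) / len(scores))
    return {
        "promoters": promoters,
        "passives": passives,
        "detractors": detractors,
        "nps": score,
    }


# Hypothetical ratings from ten participants.
scores = [10, 9, 9, 8, 7, 6, 10, 3, 9, 8]
result = nps(scores)
print(result)
```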